A memory leak in node (ActionHero)

actionhero javascript node.js

2016-05-28T18:04:52.000Z

↞ See all posts

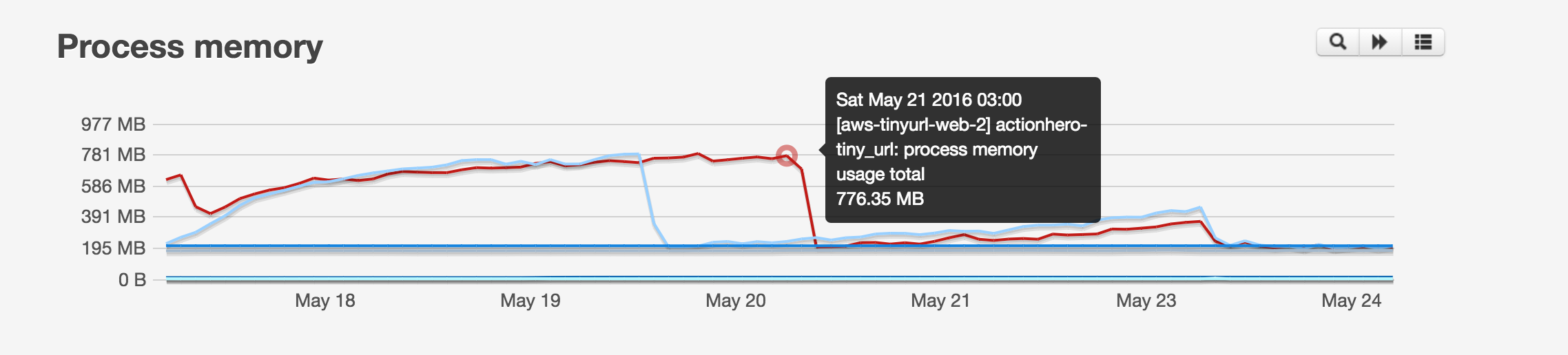

We recently found & solved a memory leak in ActionHero. If you use ActionHero to serve static assets, you should see a significant memory reduction with long-running servers. Yay!

This leak was discovered by the TaskRabbit team when one of their micro-services kept restarting every few days. TaskRabbit uses monit to run all of their applications, and when an app uses too much RAM, it will HUP the app (preforming a graceful restart) and notify the team via PagerDuty:

1# managed by ansible 2 3CHECK PROCESS actionhero-{{ application }} 4 5 WITH PIDFILE /home/{{ deploy_user }}/www/{{ application }}/shared/pids/cluster_pidfile 6 7 START PROGRAM "/bin/bash -c 'source /home/deploy/.profile && cd /home/{{ deploy_user }}/www/{{ application }}/current && HOME=/home/{{ deploy_user }} ./node_modules/.bin/actionhero start cluster --daemon --workers={{ actionhero_workers }}'" 8 as uid {{ deploy_user }} 9 with timeout 30 seconds 10 11 STOP PROGRAM "/bin/bash -c 'kill `cat /home/{{ deploy_user }}/www/{{ application }}/shared/pids/cluster_pidfile`'" 12 as uid {{ deploy_user }} 13 14 if mem is greater than 600 MB for 5 cycles 15 then exec "/bin/bash -c 'kill -s USR2 cat `cat /home/{{ deploy_user }}/www/{{ application }}/shared/pids/cluster_pidfile`'" 16 17 if totalmemory is greater than 800 MB for 10 cycles 18 then exec "/bin/bash -c 'kill -s USR2 cat `cat /home/{{ deploy_user }}/www/{{ application }}/shared/pids/cluster_pidfile`'" 19 20 if mem is greater than 600 MB for 5 cycles 21 then alert 22 else if passed for 3 cycles 23 then alert 24 25 if totalmemory is greater than 800 MB for 10 cycles 26 then alert 27 else if passed for 3 cycles 28 then alert 29 30 if cpu is greater than 25% for 20 cycles 31 then alert 32 else if passed for 3 cycles 33 then alert 34 35 if totalcpu is greater than 90% for 10 cycles 36 then alert 37 else if passed for 3 cycles 38 then alert 39 40 if uptime < 1 minutes for 3 cycles 41 then alert 42 else if passed for 3 cycles 43 then alert

- Note that in Ansible, the `{{ }}` variables are interpolated, and won’t be there in the final file

So what was happening here? This application had previously never served any static files, and was an API endpoint (which runs tr.co). Now, with the introduction of Universal Links in IOS, we are also serving up the typical apple-app-site-association file. To support multiple environments, we actually use an action to serve this file, and return the proper one:

1exports.action = { 2 name: "apple-app-site-association", 3 description: "I return the wacky payload apple needs", 4 5 run: function (api, data, next) { 6 data.connection.sendFile("apple-app-site-association/" + api.env + ".json"); 7 data.toRender = false; 8 next(); 9 }, 10};

1exports.default = { 2 routes: function (api) { 3 return { 4 all: [ 5 { 6 path: "/apple-app-site-association", 7 action: "apple-app-site-association", 8 }, 9 ], 10 }; 11 }, 12};

… and it works fine. However, the only change the application underwent which triggered the memory leak was this action… so there has to be something wrong here.

After scouring the application itself with node-debugger, nothing looked out-of-wack, but adding in a load-test, the problem was certainly reproducible. From there I dove into actionhero’s core and sure enough, sending lots of files, either though the static server or `data.connection.sendFile()` caused a leak :(

After digging around deep in ActionHero’s guts, I narrowed down the problem to the fact that there was an ever-growing number of HTTP connections (an internal object to ActionHero which represents the state of a request) that just never completed. Less than 1% of the connections have this problem, but over time, it would be enough to cause the leak. I decided to see if I could reproduce the problem from scratch, in a simple, 1-file node app… and here is the result:

1var fs = require("fs"); 2var http = require("http"); 3var file = __dirname + "/index.html"; 4var connections = {}; 5var idCouner = 0; 6var port = 8080; 7 8var handleRequset = function (request, response) { 9 idCouner++; 10 var id = idCouner; 11 connections[id] = { req: request, res: response }; 12 13 response.on("finish", function () { 14 delete connections[id]; 15 }); 16 17 fs.stat(file, function (error, stats) { 18 if (error) { 19 throw error; 20 } 21 response.writeHead(200, [["Content-Length", stats.size]]); 22 23 var fileStream = fs.createReadStream(file); 24 fileStream.on("open", function () { 25 fileStream.pipe(response); 26 }); 27 28 fileStream.on("error", function (error) { 29 console.log(error); // no errors are caught 30 }); 31 }); 32}; 33 34http.createServer(handleRequset).listen(port); 35 36console.log("server running on port " + port); 37setInterval(function () { 38 console.log("connections: " + Object.keys(connections)); 39}, 5000);

If you were to run this server, and use the loadtest module against the server:

1npm install loadtest 2 3./node_modules/.bin/loadtest -c 10 — rps 200 http://localhost:8080

You would get a result that a small amount of connection objects are hanging around and never resolved:

1> node server.js 2 3server running on port 8080 4connections: 5connections: 2867 6connections: 2867 7connections: 2867 8connections: 2867 9connections: 2867 10connections: 2867 11connections: 2867 12connections: 2867 13connections: 2867 14connections: 2867,12403 15connections: 2867,12403 16connections: 2867,12403 17connections: 2867,12403 18connections: 2867,12403 19connections: 2867,12403 20connections: 2867,12403 21connections: 2867,12403 22connections: 2867,12403 23connections: 2867,12403 24connections: 2867,12403,22350 25connections: 2867,12403,22350

… and there’s our leak!

Now to figure out why.

After struggling with this for a few days, I threw up my hands and decided this might be a bug in node.js’ core, and created an issue https://github.com/nodejs/node/issues/6929. Luckily, some helpful folks were able to point out my error.

I had been testing for all sorts errors with the file stream… but I was forgetting about errors which might happen with the HTTP connection. If an http connection completes happily, it emits the `finish` event. However, if a response object is prematurely closed (perhaps the client disconnects, has networking trouble, etc), the response object will emit the `close` event instead… an event we were ignoring! This certainly correlates with the behavior we were seeing… a small number of requests might have trouble, disconnect… and ActionHero would never free that connection object.

The relevant sections of ActionHero were fixed in version 13.4.1, and now our connection logic for sending files via HTTP looks like:

1// the ‘finish’ event denotes a successful transfer 2connection.rawConnection.res.on("finish", function () { 3 connection.destroy(); 4}); 5 6// the ‘close’ event denotes a failed transfer, but it is probably the client’s fault 7connection.rawConnection.res.on("close", function () { 8 connection.destroy(); 9});

I write about Technology, Software, and Startups. I use my Product Management, Software Engineering, and Leadership skills to build teams that create world-class digital products.

Get in touch