Update (12/18/2012)

These ideas have now been formally incorporated into the actionHero project. To learn how to launch actionHero in a clustered way, check out the wiki.

Update (12/5/2012)

While other servers also use SIGWINCH to mean "kill all my workers", it’s important to note that this signal also fires when you resize your terminal window (responsive console design, anyone?). Be sure that only daemonized/background processes respond to SIGWINCH!

Introduction

I was asked recently how to deploy actionHero to production. Initially, my naive answer was to simply suggest the use of forever, but that was only a partial solution. Forever is a great package which acts as a sort of daemonizer for your projects. It can monitor running apps and restart them, handle stdout/stderr and logging, etc. It’s a great monitoring solution, but when you run forever restart myApp you will incur some downtime. I’ve spent the past few days working on a full solution.

Footnote: This is a *nix (OS X/Linux/Solaris) answer only. I’m fairly sure this kind of thing won’t work on Windows.

At TaskRabbit (a Ruby/Rails ecosystem) we have put a lot of effort into "properly" implementing Capistrano and Unicorn so that we can have 0-downtime deployment. This is integral to our culture, and allows us to deploy worry-free a number of times each day. This also makes the code-delta in our deployments smaller, and therefore less risky (to say nothing of the value in reducing the time it takes to launch new features). 0-downtime deployments are good.

Framework

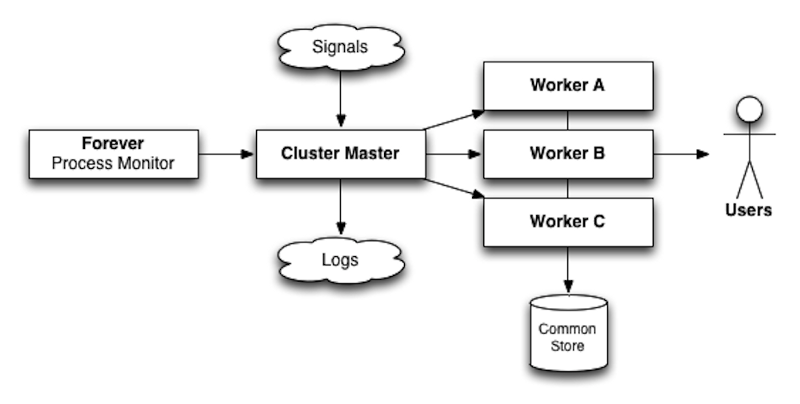

Ok, so how to make a 0-downtime node deployment? Forever is certainly part of the solution, but the meat of the answer lies in the node.js cluster module (and how awesome node is at being unix-y).

The cluster module allows one node process to spawn children and share open resources with them. This might include file pointers, but in our case, we are going to share open ports and unix sockets. In a nutshell, if you have one worker open port 80, other workers can also listen on port 80. The cluster module will share the load between all available workers.

The cluster module is usually approached as a way to load balance an application (and it’s great at that), but it can also be used as a way to hand over an open connection from one worker to another. In this way, we can tell one worker to die off while another is starting. With enough workers running (and some basic callbacks), we can ensure that there is always a worker around to handle any incoming requests.

Application Considerations

This is some core node magic right here. Whether you have created an HTTP server or a direct TCP server, the default behavior of server.close() is actually quite graceful. Check out the docs and you will see that the server stops accepting new connections but does not kick out any existing ones, and when all clients have finally left, a callback is fired. We will be waiting for this callback to know that it is safe to close out our server.

For an HTTP server this is pretty straightforward: no new connections will be allowed in, and any long-lasting connections will have the chance to finish. In our cluster setup, that means that any new connections that come in during this time will be passed to another worker… exactly what we want! (Note: it’s possible that a connection might never finish, but that’s out of scope for this discussion.)
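As a minimal sketch of that behavior, assuming a plain http server on an arbitrary free port (the `stopGracefully` helper is a hypothetical name for illustration, not part of actionHero):

```javascript
var http = require("http");

// Hypothetical helper: stop accepting new connections and call `done`
// once every existing connection has ended.
function stopGracefully(server, done) {
  server.close(function (err) {
    // `err` is set if the server was not listening in the first place
    done(err);
  });
}

var server = http.createServer(function (req, res) {
  res.end("hello\n");
});

// Port 0 asks the OS for any free port; a real app would use its own port.
server.listen(0, function () {
  console.log("listening on port " + server.address().port);
  stopGracefully(server, function (err) {
    if (err) {
      console.log("close failed: " + err.message);
    } else {
      console.log("all connections finished, safe to exit");
    }
  });
});
```

With no clients connected the callback fires almost immediately; with requests still in flight it would be delayed until they complete.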

Raw TCP connections are another matter. The server behaves the same way, but TCP connections never really expire, so if we don’t kick out existing connections, the server will never exit. Take a look at this snippet of code from actionHero’s socketServer:

```javascript
api.socketServer.gracefulShutdown = function (api, next, alreadyShutdown) {
  if (alreadyShutdown == null) {
    alreadyShutdown = false;
  }
  if (alreadyShutdown == false) {
    api.socketServer.server.close();
    alreadyShutdown = true;
  }
  for (var i in api.socketServer.connections) {
    var connection = api.socketServer.connections[i];
    if (connection.responsesWaitingCount == 0) {
      api.socketServer.connections[i].end("Server going down NOW\r\nBye!\r\n");
    }
  }
  if (api.socketServer.connections.length != 0) {
    api.log(
      "[socket] waiting on shutdown, there are still " +
        api.socketServer.connections.length +
        " connected clients waiting on a response",
    );
    setTimeout(function () {
      api.socketServer.gracefulShutdown(api, next, alreadyShutdown);
    }, 3000);
  } else {
    next();
  }
};
```

In the part of the TCP server that handles incoming requests, we increment the connection’s connection.responsesWaitingCount, and when the action completes and the response is sent to the client, we decrement it. This way we can approximate whether the client is "waiting for a response" or not. It’s important to remember that TCP clients can request many actions at the same time (unlike HTTP, where each request can only ever have one response). Note that once a client is deemed fit to disconnect we send a ‘goodbye’ message. The client is then responsible for reconnecting, and it will come back and connect to another worker.
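The counter bookkeeping described above can be sketched in isolation. This is an illustration only: `makeConnection`, `actionStarted`, `actionFinished`, and `canDisconnect` are hypothetical names, and only `responsesWaitingCount` comes from the snippet above.

```javascript
// Hypothetical stand-in for a TCP connection record; only the counter matters here.
function makeConnection() {
  return { responsesWaitingCount: 0 };
}

// Call when a request arrives on the connection.
function actionStarted(connection) {
  connection.responsesWaitingCount++;
}

// Call once the response has been written back to the client.
function actionFinished(connection) {
  connection.responsesWaitingCount--;
}

// A connection is safe to drop only when nothing is in flight.
function canDisconnect(connection) {
  return connection.responsesWaitingCount === 0;
}

var connection = makeConnection();
actionStarted(connection); // client asks for action A
actionStarted(connection); // ...and action B before A has finished
actionFinished(connection); // A responds
console.log(canDisconnect(connection)); // false: B is still pending
actionFinished(connection); // B responds
console.log(canDisconnect(connection)); // true
```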

WebSockets work the same way as the TCP server does. Once we disconnect each client, they will reconnect to a new worker node, as the old one has stopped taking connections. socket.io’s browser code is very well written and will reconnect and retry any commands that have failed. socket.io binds to the http server we talked about earlier, so shutting it down will also disconnect all websocket clients.

Now that we have servers that gracefully shut down, how do we use them?

The Cluster Leader

The reason for gracefully disconnecting each client was that we are not going to restart each server, but rather kill it entirely and create a new one. Creating a new worker ensures that each process will load in any new code and have a fresh environment to work within.

The Cluster leader has a few main goals:

- Sharing Resources. We get this for free from the node.js cluster module

- Worker management. This includes restarting them when they fail along with responding to requests to start/stop them

- Responding to Signals. We’ll cover this in a moment, but essentially this is how you communicate with the leader once he is up and running.

- Logging. You gotta’ log the state of your cluster!

Sharing Resources:

As mentioned before, open sockets and ports can be shared (for free) by all children in the cluster. Yay node!

Worker Management:

The cluster module provides a message passing interface between leader and follower. You can pass anything that can be JSON.stringified (no pointers). We can use these methods to be aware of when a booted worker is ready to accept connections, and conversely, we can tell a worker to begin its graceful shut down process (rather than outright killing the process). Take a look at the worker code at the bottom of the article for more details. Note the use of process.send(msg) within the callbacks for actionHero.start() and actionHero.stop().
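As a rough sketch of what the leader could do with those status messages, here is some leader-side bookkeeping. The status strings match the ones the worker code sends; `workerStates`, `recordStatus`, and `readyWorkerIds` are hypothetical names and not part of actionHero or the cluster module.

```javascript
// Leader-side bookkeeping for the status strings each worker reports.
var workerStates = {}; // worker id -> last reported status

function recordStatus(workerId, message) {
  workerStates[workerId] = message;
}

// Only workers that have finished booting should count as able to serve requests.
function readyWorkerIds() {
  return Object.keys(workerStates).filter(function (id) {
    return workerStates[id] === "started" || workerStates[id] === "restarted";
  });
}

// In a real leader this would be wired up roughly as:
//   worker.on("message", function (message) { recordStatus(worker.id, message); });
recordStatus(1, "starting");
recordStatus(2, "starting");
recordStatus(1, "started");
console.log(readyWorkerIds()); // [ '1' ]
```

Note that object keys come back as strings, so the ids in the result are `'1'` rather than `1`.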

Responding to Signals:

Unix signals are the classy way to communicate with a running application. You send them with the kill command, and each signal has a common meaning:

- SIGINT / SIGTERM / SIGKILL Kill the running process, with various degrees of severity. In our application we will treat SIGINT and SIGTERM as meaning "kill the leader and all of his workers". (SIGKILL cannot be caught by a process, so it will always kill the leader immediately.)

- SIGUSR2 Tell the leader to reload the configuration of his workers. In our cluster, this will mean a rolling restart of each worker one-by-one.

- SIGHUP Reload all workers. For us, this will mean restarting every worker at the same time (which can lead to downtime)

- SIGWINCH kill off all workers

- TTIN / TTOU add a worker / remove a worker

So if you wanted to tell the leader to stop all of his workers (and his pid was 123), you would run kill -s WINCH 123
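One way to wire those signals up is a small lookup table plus a guard for the SIGWINCH terminal-resize case. This is a sketch under assumptions: the action names, `shouldHandle`, and `dispatch` are hypothetical, and a real leader would start or stop workers where this one only logs.

```javascript
// Signal -> action table, following the conventions listed above.
var signalActions = {
  SIGINT: "shutdown",
  SIGTERM: "shutdown",
  SIGUSR2: "rolling-restart",
  SIGHUP: "restart-all",
  SIGWINCH: "stop-workers",
  SIGTTIN: "add-worker",
  SIGTTOU: "remove-worker",
};

// SIGWINCH also fires when a terminal window is resized, so only honor it
// when the process is not attached to a terminal.
function shouldHandle(signal, isTTY) {
  if (signal === "SIGWINCH" && isTTY) {
    return false;
  }
  return Object.prototype.hasOwnProperty.call(signalActions, signal);
}

// Hypothetical dispatcher; a real leader would manage its workers here.
function dispatch(action) {
  console.log("would perform: " + action);
  if (action === "shutdown") {
    process.exit(0);
  }
}

Object.keys(signalActions).forEach(function (signal) {
  process.on(signal, function () {
    if (shouldHandle(signal, Boolean(process.stdout.isTTY))) {
      dispatch(signalActions[signal]);
    }
  });
});
```

SIGKILL is deliberately absent from the table, since a process cannot install a handler for it.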

USR2 is the most interesting case here. While there are ways to "reload configuration" in a running node.js app (flush the module cache, reload all source modules, etc), it’s usually a lot safer just to start up a new app from scratch. I say that we are going to do a "rolling restart" because we literally are going to kill off the first worker, spawn a new one, and repeat. Assuming we have 2 or more workers, this means that there will always be at least one worker around to handle requests. Now this might lead to problems where some workers have an old version of your codebase and some workers have a new version, but usually that is preferable to outright downtime. Oh, and try not to have more workers than you have CPUs!

The main function in charge of these "rolling restarts" is here:

```javascript
var reloadAWorker = function (next) {
  var count = 0;
  for (var i in cluster.workers) {
    count++;
  }
  if (workersExpected > count) {
    startAWorker();
  }
  if (workerRestartArray.length > 0) {
    var worker = workerRestartArray.pop();
    worker.send("stop");
  }
};

cluster.on("exit", function (worker, code, signal) {
  log("worker " + worker.process.pid + " (#" + worker.id + ") has exited");
  setTimeout(reloadAWorker, 1000); // to prevent CPU-splosions if crashing too fast
});
```

When we initiate a rolling restart, we add all workers to the workerRestartArray, and then one-by-one they will be dropped. Note that on every worker’s exit, we run reloadAWorker(). This also ensures that if a worker died due to an error, we will start another one in its place (workersExpected is modified by TTIN and TTOU). The reason for the timeout is to ensure that if a worker is crashing on boot (perhaps it can’t connect to your database), the leader isn’t restarting workers as fast as possible… as this would probably lock up your machine.
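To see the ordering of a rolling restart without forking anything, here is an in-memory simulation. It is a simplification under stated assumptions: each "worker" is just an id, `stopWorker` stands in for `worker.send("stop")` followed by the cluster "exit" event, and replacements boot instantly (real workers take time to start, and the real code waits a second before reloading).

```javascript
// In-memory simulation of the rolling restart: no processes are forked.
var nextId = 1;
var liveWorkers = [];
var workersExpected = 3;
var restartQueue = [];
var lowestLiveCount = Infinity;

function startAWorker() {
  liveWorkers.push(nextId++);
}

// Stands in for worker.send("stop") followed by the cluster "exit" event.
function stopWorker(id) {
  liveWorkers.splice(liveWorkers.indexOf(id), 1);
  lowestLiveCount = Math.min(lowestLiveCount, liveWorkers.length);
  reloadAWorker();
}

function reloadAWorker() {
  if (workersExpected > liveWorkers.length) {
    startAWorker(); // replace the worker that just exited
  }
  if (restartQueue.length > 0) {
    stopWorker(restartQueue.pop()); // then retire the next old worker
  }
}

for (var i = 0; i < workersExpected; i++) {
  startAWorker();
}

// Equivalent of receiving SIGUSR2: queue every current worker, then begin.
restartQueue = liveWorkers.slice();
reloadAWorker();

console.log(liveWorkers); // [ 4, 5, 6 ]
console.log(lowestLiveCount); // 2
```

Every original worker has been replaced, and the number of live workers never dropped below two out of three.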

Deployment Notes

- While your workers will load up new code changes on restart, the cluster leader will not. Unfortunately, you will need to restart it to catch any code changes. Luckily, forever can do this quickly. When you restart the leader, all of his workers will die off.

- Building from the earlier comment, child processes die when the parent dies. That’s just how it is (usually). That means that if for any reason the leader dies (kill, ctrl-c, etc), all of the workers will die too. That’s why it is best to keep the leader’s code base as simple as possible (and separate from the workers). This is also why we use something like forever to monitor its uptime and restart it if anything goes wrong.

- I’ve talked about using Capistrano to deploy node.js applications before, but there are lots of methods to get your code on the server (fabric, chef, github post-commit hooks, etc). Once your leader is up and running, your deployment really only looks like 1) git pull 2) kill -s USR2 (pid of leader). Yay!
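The second deployment step can also be scripted from node. The sketch below assumes the leader writes its pid to a pidfile, as the leader code does. `signalLeader` is a hypothetical helper, and the kill function is injectable so the demo does not signal a real process.

```javascript
var fs = require("fs");
var os = require("os");
var path = require("path");

// Read the leader's pid from its pidfile and send it a signal.
// `killFn` is optional and defaults to process.kill.
function signalLeader(pidfile, signal, killFn) {
  var pid = parseInt(fs.readFileSync(pidfile, "utf8").trim(), 10);
  if (isNaN(pid)) {
    throw new Error("no pid found in " + pidfile);
  }
  killFn = killFn || function (p, s) {
    process.kill(p, s);
  };
  killFn(pid, signal);
  return pid;
}

// Demo with a throwaway pidfile and a fake kill function.
var demoPidfile = path.join(os.tmpdir(), "cluster_pidfile_demo_" + process.pid);
fs.writeFileSync(demoPidfile, "12345");
signalLeader(demoPidfile, "SIGUSR2", function (pid, signal) {
  console.log("would send " + signal + " to pid " + pid);
});
fs.unlinkSync(demoPidfile);
```

In a real deploy you would call `signalLeader("./cluster_pidfile", "SIGUSR2")` after the git pull, using whatever pidfile path your leader is configured with.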

Code

Here is the state of actionHero’s cluster code at the time of this post. It’s likely to keep evolving, so you can always check out the latest version on GitHub.

Follower

```javascript
#!/usr/bin/env node

// load in the actionHero class
var actionHero = require(__dirname + "/../api.js").actionHero; // normally if installed by npm: var actionHero = require("actionHero").actionHero;
var cluster = require("cluster");

// holds the running api object once the server has booted
var api;

// if there is no config.js file in the application's root, then actionHero will load in a collection of default params. You can overwrite them with params.configChanges
var params = {};
params.configChanges = {};

// any additional functions you might wish to define to be globally accessible can be added as part of params.initFunction. The api object will be available.
params.initFunction = function (api, next) {
  next();
};

// start the server!
var startServer = function (next) {
  if (cluster.isWorker) {
    process.send("starting");
  }
  actionHero.start(params, function (api_from_callback) {
    api = api_from_callback;
    api.log("Boot Successful @ worker #" + process.pid, "green");
    if (typeof next == "function") {
      if (cluster.isWorker) {
        process.send("started");
      }
      next(api);
    }
  });
};

// handle signals from leader if running in cluster
if (cluster.isWorker) {
  process.on("message", function (msg) {
    if (msg == "start") {
      process.send("starting");
      startServer(function () {
        process.send("started");
      });
    }
    if (msg == "stop") {
      process.send("stopping");
      actionHero.stop(function () {
        api = null;
        process.send("stopped");
        process.exit();
      });
    }
    if (msg == "restart") {
      process.send("restarting");
      actionHero.restart(function (success, api_from_callback) {
        api = api_from_callback;
        process.send("restarted");
      });
    }
  });
}

// start the server!
startServer(function (api) {
  api.log("Successfully Booted!", ["green", "bold"]);
});
```

Leader

```javascript
#!/usr/bin/env node

//////////////////////////////////////////////////////////////////////////////////////////////////////
//
// TO START IN CONSOLE: `./scripts/actionHeroCluster`
// TO DAEMONIZE: `forever start scripts/actionHeroCluster`
//
// ** Production-ready actionHero cluster example **
// - workers which die will be restarted
// - leader/manager specific logging
// - pidfile for leader
// - USR2 restarts (graceful reload of workers while handling requests)
// -- Note, socket/websocket clients will be disconnected, but there will always be a worker to handle them
// -- HTTP, HTTPS, and TCP clients will be allowed to finish the action they are working on before the server goes down
// - TTOU and TTIN signals to subtract/add workers
// - WINCH to stop all workers
// - TCP, HTTP(s), and Web-socket clients will all be shared across the cluster
// - Can be run as a daemon or in-console
// -- Lazy Daemon: `nohup ./scripts/actionHeroCluster &`
// -- you may want to explore `forever` as a daemonizing option
//
// * Setting process titles does not work on windows or OSX
//
// This example was heavily inspired by Ruby Unicorns [[ http://unicorn.bogomips.org/ ]]
//
//////////////////////////////////////////////////////////////////////////////////////////////////////

//////////////
// Includes //
//////////////

var fs = require("fs");
var cluster = require("cluster");
var colors = require("colors");

var numCPUs = require("os").cpus().length;
var numWorkers = numCPUs - 2;
if (numWorkers < 2) {
  numWorkers = 2;
}

////////////
// config //
////////////

var config = {
  // script for workers to run (You probably will be changing this)
  exec: __dirname + "/actionHero",
  workers: numWorkers,
  pidfile: "./cluster_pidfile",
  log: process.cwd() + "/log/cluster.log",
  title: "actionHero-leader",
  workerTitlePrefix: " actionHero-worker",
  silent: true, // don't pass stdout/err to the leader
};

/////////
// Log //
/////////

var logHandle = fs.createWriteStream(config.log, { flags: "a" });
var log = function (msg, col) {
  var sqlDateTime = function (time) {
    if (time == null) {
      time = new Date();
    }
    var dateStr =
      padDateDoubleStr(time.getFullYear()) +
      "-" +
      padDateDoubleStr(1 + time.getMonth()) +
      "-" +
      padDateDoubleStr(time.getDate()) +
      " " +
      padDateDoubleStr(time.getHours()) +
      ":" +
      padDateDoubleStr(time.getMinutes()) +
      ":" +
      padDateDoubleStr(time.getSeconds());
    return dateStr;
  };

  var padDateDoubleStr = function (i) {
    return i < 10 ? "0" + i : "" + i;
  };
  msg = sqlDateTime() + " | " + msg;
  logHandle.write(msg + "\r\n");
  if (typeof col == "string") {
    col = [col];
  }
  for (var i in col) {
    msg = colors[col[i]](msg);
  }
  console.log(msg);
};

//////////
// Main //
//////////
log(" - STARTING CLUSTER -", ["bold", "green"]);
// set pidFile
if (config.pidfile != null) {
  fs.writeFileSync(config.pidfile, process.pid.toString());
}
process.stdin.resume();
process.title = config.title;
var workerRestartArray = [];

// used to track rolling restarts of workers
var workersExpected = 0;

// signals
process.on("SIGINT", function () {
  log("Signal: SIGINT");
  workersExpected = 0;
  setupShutdown();
});

process.on("SIGTERM", function () {
  log("Signal: SIGTERM");
  workersExpected = 0;
  setupShutdown();
});

// SIGKILL cannot be caught by a process, so there is no handler for it

process.on("SIGUSR2", function () {
  log("Signal: SIGUSR2");
  log("swap out new workers one-by-one");
  workerRestartArray = [];
  for (var i in cluster.workers) {
    workerRestartArray.push(cluster.workers[i]);
  }
  reloadAWorker();
});

process.on("SIGHUP", function () {
  log("Signal: SIGHUP");
  log("reload all workers now");
  for (var i in cluster.workers) {
    var worker = cluster.workers[i];
    worker.send("restart");
  }
});

// note: SIGWINCH also fires when a terminal window is resized
process.on("SIGWINCH", function () {
  log("Signal: SIGWINCH");
  log("stop all workers");
  workersExpected = 0;
  for (var i in cluster.workers) {
    var worker = cluster.workers[i];
    worker.send("stop");
  }
});

process.on("SIGTTIN", function () {
  log("Signal: SIGTTIN");
  log("add a worker");
  workersExpected++;
  startAWorker();
});

process.on("SIGTTOU", function () {
  log("Signal: SIGTTOU");
  log("remove a worker");
  workersExpected--;
  for (var i in cluster.workers) {
    var worker = cluster.workers[i];
    worker.send("stop");
    break;
  }
});

process.on("exit", function () {
  workersExpected = 0;
  log("Bye!");
});

// signal helpers
var startAWorker = function () {
  // declared with var so each message handler below refers to its own worker
  var worker = cluster.fork();
  log("starting worker #" + worker.id);
  worker.on("message", function (message) {
    if (worker.state != "none") {
      log("Message [" + worker.process.pid + "]: " + message);
    }
  });
};

var setupShutdown = function () {
  log("Cluster manager quitting", "red");
  log("Stopping each worker...");
  for (var i in cluster.workers) {
    cluster.workers[i].send("stop");
  }
  setTimeout(loopUntilNoWorkers, 1000);
};

var loopUntilNoWorkers = function () {
  // cluster.workers is an object keyed by worker id, so count its keys
  var remaining = Object.keys(cluster.workers).length;
  if (remaining > 0) {
    log("there are still " + remaining + " workers...");
    setTimeout(loopUntilNoWorkers, 1000);
  } else {
    log("all workers gone");
    if (config.pidfile != null) {
      fs.unlinkSync(config.pidfile);
    }
    process.exit();
  }
};

var reloadAWorker = function (next) {
  var count = 0;
  for (var i in cluster.workers) {
    count++;
  }
  if (workersExpected > count) {
    startAWorker();
  }
  if (workerRestartArray.length > 0) {
    var worker = workerRestartArray.pop();
    worker.send("stop");
  }
};

// Fork it.
// (cluster.setupMaster is the API name at the time of writing; newer node versions call it cluster.setupPrimary)
cluster.setupMaster({
  exec: config.exec,
  args: process.argv.slice(2),
  silent: config.silent,
});
for (var i = 0; i < config.workers; i++) {
  workersExpected++;
  startAWorker();
}
cluster.on("fork", function (worker) {
  log("worker " + worker.process.pid + " (#" + worker.id + ") has spawned");
});
cluster.on("listening", function (worker, address) {});
cluster.on("exit", function (worker, code, signal) {
  log("worker " + worker.process.pid + " (#" + worker.id + ") has exited");
  setTimeout(reloadAWorker, 1000); // to prevent CPU-splosions if crashing too fast
});
```

Enjoy!

I write about Technology, Software, and Startups. I use my Product Management, Software Engineering, and Leadership skills to build teams that create world-class digital products.
